Rebirth: I Want to Learn Distributed Systems, Part One

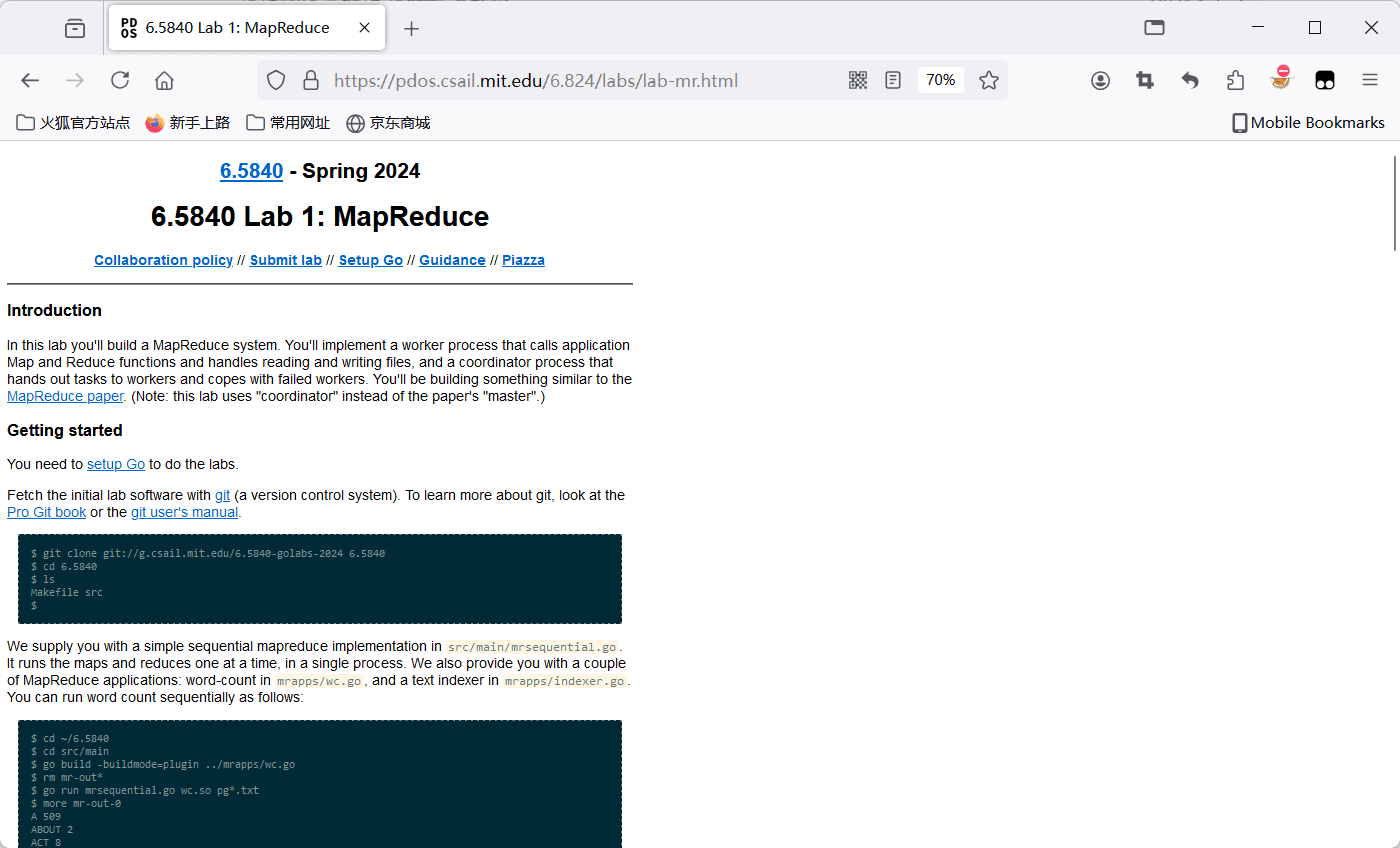

Course Homepage:

MIT 6.5840: Distributed Systems (formerly 6.824) is a star course that assigned five labs in 2024. The labs build on one another: they start with a classic distributed MapReduce, move on to implementing Raft from scratch, and finish with a distributed database similar to TiKV. Working through them is as satisfying as hand-building Windows starting from a single needle.

## How to Prevent and Treat Cervical Spondylosis

Just as the first challenge of a certain domestic AAA game is unpacking its files, the first challenge of this course is reading documentation that seems designed to give you cervical spondylosis.

Because the lab pages hug the left edge of the screen, you can center them by pasting the following snippet into the browser's developer console:

```javascript
// Limit the page width and center it horizontally
const body = document.body;
body.style.maxWidth = "800px";
body.style.marginLeft = "auto";
body.style.marginRight = "auto";

// Keep every element (images, code blocks, tables) inside the narrower body
const elements = body.getElementsByTagName("*");
for (let i = 0; i < elements.length; i++) {
  elements[i].style.maxWidth = "100%";
  elements[i].style.boxSizing = "border-box";
}
```

## A Brief Introduction to the Labs

Lab 1 is a fairly self-contained appetizer: implement the MapReduce framework that Google once used.

Lab 2 builds a simple single-machine KV server that must keep behaving correctly even when the network drops requests or replies.

Lab 3 implements the Raft consensus protocol.

Lab 4 builds a replicated KV service on top of the Raft implementation from Lab 3, adding fault tolerance to the Lab 2 design.

Lab 5 splits the database into shards spread across several replica groups to reduce the load on any single server, and requires implementing the migration of shards between groups when the configuration changes.
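To make the sharding idea concrete, here is a minimal Go sketch. `key2shard` is modeled on the helper the lab skeleton provides, which buckets a key by its first byte; `NShards = 10`, the simplified `Config` type, and the `shardsToMove` helper are assumptions for illustration, not the lab's actual code. `shardsToMove` shows what migration has to compute: the shards a group owned in the old configuration but no longer owns in the new one.

```go
package main

import "fmt"

// NShards is the assumed number of shards (10 here, for illustration).
const NShards = 10

// Config is a simplified, hypothetical configuration: Shards[i] holds the
// ID of the replica group that owns shard i in configuration number Num.
type Config struct {
	Num    int
	Shards [NShards]int
}

// key2shard buckets a key by its first byte; the empty key maps to shard 0.
func key2shard(key string) int {
	shard := 0
	if len(key) > 0 {
		shard = int(key[0])
	}
	return shard % NShards
}

// shardsToMove lists the shards group gid owned in old but not in next,
// i.e. the data it has to hand off to other groups during migration.
func shardsToMove(old, next Config, gid int) []int {
	var moved []int
	for s := 0; s < NShards; s++ {
		if old.Shards[s] == gid && next.Shards[s] != gid {
			moved = append(moved, s)
		}
	}
	return moved
}

func main() {
	old := Config{Num: 1}
	next := Config{Num: 2}
	for s := 0; s < NShards; s++ {
		old.Shards[s] = 100 // group 100 initially owns every shard
		if s < NShards/2 {
			next.Shards[s] = 100
		} else {
			next.Shards[s] = 101 // group 101 joins and takes the upper half
		}
	}
	fmt.Println(key2shard("apple"))           // 'a' is 97, so shard 7
	fmt.Println(shardsToMove(old, next, 100)) // [5 6 7 8 9]
}
```

In a real implementation the moved shards also carry their key/value data and duplicate-detection state, and the hand-off has to be agreed on through Raft in both groups.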

In my view, the two hardest labs are clearly Lab 3 and Lab 5. Lab 3 demands a careful reading of the original Raft paper, while Lab 5 requires designing the whole architecture up front; I also had to search online for existing implementation ideas before I could get started.

## Debugging Tips

In my experience, the hardest part of these labs is not design or coding but debugging. Breakpoint debugging works for a single process, but these labs run a whole cluster of servers exchanging RPCs, where pausing one process disturbs the timing of the rest of the system. Debugging therefore relies mostly on print statements, which makes a well-designed logging helper essential. For Lab 5, I wrapped `log.Printf` like this:

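```go
// SSPrintf prefixes each message with the replica group ID and prints it
// only when the package-level SDebug flag is true (uses the "log" package).
func (kv *ShardKV) SSPrintf(format string, a ...interface{}) (n int, err error) {
	if SDebug {
		// Prepend kv.gid to the caller's arguments so it fills the leading %d.
		log.Printf("[server %d] "+format, append([]interface{}{kv.gid}, a...)...)
	}
	return
}
```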

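Here `kv.gid` is the ID of the replica group this ShardKV server belongs to. In practice the prefix can carry more context, such as the server's index within its group or the configuration number it is serving, but reading fields that other goroutines modify can itself introduce data races that `go test -race` will report, so take care how that information is obtained.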
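One way to put mutable state into the prefix without racing is sketched below. The trimmed-down `ShardKV` struct, its `configNum` field, and the helper names `prefixLocked`, `logfLocked`, and `logf` are hypothetical. The main design choice is having two entry points, one for call sites that already hold the mutex and one for call sites that do not, because Go's `sync.Mutex` is not reentrant and locking it twice from the same goroutine deadlocks.

```go
package main

import (
	"fmt"
	"log"
	"sync"
)

// SDebug mirrors the on/off flag used by SSPrintf.
var SDebug = true

// ShardKV is a trimmed-down, hypothetical server with only the fields the
// log prefix needs. configNum is updated by another goroutine, so every
// access must hold mu.
type ShardKV struct {
	mu        sync.Mutex
	me        int
	gid       int
	configNum int
}

// prefixLocked builds the log prefix. The caller must hold kv.mu.
func (kv *ShardKV) prefixLocked() string {
	return fmt.Sprintf("[gid %d srv %d cfg %d] ", kv.gid, kv.me, kv.configNum)
}

// logfLocked is for call sites that already hold kv.mu. It must not lock
// again: sync.Mutex is not reentrant, so doing so would deadlock.
func (kv *ShardKV) logfLocked(format string, a ...interface{}) {
	if SDebug {
		log.Printf(kv.prefixLocked()+format, a...)
	}
}

// logf is for call sites that do not hold kv.mu. It locks only long enough
// to snapshot the prefix, then prints outside the critical section.
func (kv *ShardKV) logf(format string, a ...interface{}) {
	if !SDebug {
		return
	}
	kv.mu.Lock()
	prefix := kv.prefixLocked()
	kv.mu.Unlock()
	log.Printf(prefix+format, a...)
}

func main() {
	kv := &ShardKV{me: 0, gid: 100, configNum: 1}
	kv.logf("server started")

	kv.mu.Lock()
	kv.configNum++
	kv.logfLocked("switched to config %d", kv.configNum)
	kv.mu.Unlock()
}
```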

In this lab I only used the `SDebug` flag to turn logging on or off, but a log-level scheme would make it easy to separate important messages from less important ones.
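A minimal sketch of such a level scheme follows. The `Level` type, the four level names, the `minLevel` threshold, and `Logf` are all hypothetical choices; the idea is simply to drop any message whose level is below a configurable threshold.

```go
package main

import (
	"fmt"
	"log"
)

// Level is a hypothetical log severity; larger values are more important.
type Level int

const (
	LevelTrace Level = iota // very chatty: every RPC, every apply
	LevelDebug              // state transitions worth following
	LevelInfo               // configuration changes, shard moves
	LevelError              // invariant violations
)

var levelNames = [...]string{"TRACE", "DEBUG", "INFO", "ERROR"}

func (l Level) String() string {
	if l >= 0 && int(l) < len(levelNames) {
		return levelNames[l]
	}
	return fmt.Sprintf("Level(%d)", int(l))
}

// minLevel is the threshold: messages below it are dropped. Lower it to
// LevelTrace when chasing a bug, raise it for quiet runs.
var minLevel = LevelInfo

// Logf prints the message if level >= minLevel and reports whether it did.
func Logf(level Level, format string, a ...interface{}) bool {
	if level < minLevel {
		return false
	}
	log.Printf("["+level.String()+"] "+format, a...)
	return true
}

func main() {
	Logf(LevelTrace, "sent AppendEntries to %d", 2) // dropped
	Logf(LevelInfo, "moved to config %d", 5)       // printed
}
```

Go 1.21 and later also ship `log/slog`, whose built-in levels (`slog.LevelDebug`, `slog.LevelInfo`, and so on) serve the same purpose if a standard-library solution is preferred.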

When analyzing a failing run, the Linux `tee` command sends the output to both stdout and a file at the same time; appending `2>&1` also captures anything written to stderr:

```bash
go test -race -run TestConcurrent3_5B 2>&1 | tee bug.txt
```

At the logging call sites you can also add extra conditions so that a message is printed only under the circumstances in which you suspect the bug occurs, which removes a large amount of irrelevant output.
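A minimal sketch of such a conditional check, assuming a hypothetical apply hook `traceApply` and a hypothetical `watchShard` setting: routine messages are printed only for the shard under suspicion, while a write to a shard the group does not own, which should never happen, is always printed.

```go
package main

import "log"

// watchShard is a hypothetical knob: the shard suspected of misbehaving,
// or -1 to log every shard.
var watchShard = 3

// shouldLog reports whether messages about shard s should be printed.
func shouldLog(s int) bool {
	return watchShard < 0 || s == watchShard
}

// traceApply is a hypothetical hook called when a Put is applied. It always
// logs writes to shards this group does not own (the suspected bug), logs
// normal writes only for the watched shard, and reports whether it printed.
func traceApply(owned map[int]bool, shard int, key string) bool {
	switch {
	case !owned[shard]:
		log.Printf("BUG? put %q applied to unowned shard %d", key, shard)
		return true
	case shouldLog(shard):
		log.Printf("put %q applied to shard %d", key, shard)
		return true
	default:
		return false
	}
}

func main() {
	owned := map[int]bool{3: true, 4: true}
	traceApply(owned, 3, "a") // watched shard: printed
	traceApply(owned, 4, "b") // owned but not watched: silent
	traceApply(owned, 5, "c") // not owned: always printed
}
```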